Research

My research interests lie at the intersection of machine learning, audio processing, and HCI. I’ve been researching how to build AI models for sound recognition that require less human annotation effort.

Talk: Overview of my PhD research

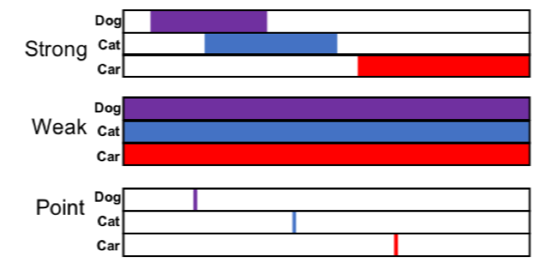

Sound Event Detection using Point-labeled Data

Collecting point labels takes less human time than collecting strong labels. A sound event detection (SED) model trained on point-labeled audio data performs comparably to a model trained on strongly labeled data. [paper]

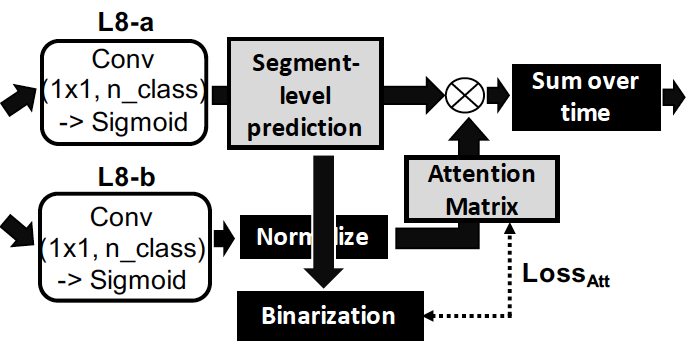

Self-supervised Attention Model for Weakly Labeled Audio Event Classification

We describe a novel approach to weakly labeled audio event classification based on a self-supervised attention model. [paper]
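For readers new to the general setting: with weak labels we only know which events occur somewhere in a clip, so a model typically predicts per-frame probabilities and then pools them into one clip-level score that the weak label can supervise. The Python sketch below shows plain attention pooling; it is not the self-supervised attention model from the paper, and the function names and toy numbers are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(frame_probs, attention_logits):
    """Combine per-frame event probabilities into one clip-level probability.

    frame_probs: per-frame probabilities (0..1) that the event is present.
    attention_logits: per-frame scores; a higher score means the frame
    counts more toward the clip-level decision.
    Returns the attention-weighted average of frame_probs.
    """
    if len(frame_probs) != len(attention_logits):
        raise ValueError("need one attention score per frame")
    weights = softmax(attention_logits)
    return sum(w * p for w, p in zip(weights, frame_probs))
```

With `frame_probs = [0.1, 0.9, 0.2]` and attention scores `[0.0, 3.0, 0.0]`, the pooled score is about 0.83, dominated by the middle frame, whereas a plain mean would give 0.4. In a trained model the attention scores are learned rather than hand-set.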

Improving Content-based Audio Retrieval by Vocal Imitation Feedback

You can improve audio search results just by imitating the sound events you do or do not want in the search results. [paper]
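One classic way to fold this kind of positive/negative feedback into a search is Rocchio relevance feedback: shift the query embedding toward the examples the user wants and away from the ones they don't. The Python sketch below assumes that recordings and vocal imitations are already embedded in one shared vector space by some encoder (not shown); it uses Rocchio as a stand-in and is not necessarily the method from the paper. The weights `alpha`, `beta`, `gamma` and the toy two-dimensional embeddings are illustrative values only.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def mean_vector(vectors, dim):
    """Element-wise mean of a list of vectors; a zero vector if the list is empty."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def rocchio_update(query, positives, negatives, alpha=1.0, beta=0.75, gamma=0.15):
    """Move the query toward wanted examples and away from unwanted ones."""
    dim = len(query)
    pos = mean_vector(positives, dim)
    neg = mean_vector(negatives, dim)
    return [alpha * q + beta * p - gamma * n for q, p, n in zip(query, pos, neg)]

def rank(query, database):
    """Return database keys sorted by cosine similarity to the query, best first."""
    return sorted(database, key=lambda k: cosine(query, database[k]), reverse=True)
```

For example, with `database = {"dog_bark": [1.0, 0.1], "car_horn": [0.1, 1.0]}` and an initial query `[0.5, 0.6]`, the car horn ranks first; after an imitation embedded near `[1.0, 0.1]` is marked as wanted and one near `[0.1, 1.0]` as unwanted, the updated query ranks the dog bark first.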

A Human-in-the-loop System for Sound Event Detection and Annotation

Human-machine collaboration speeds up the search for sound events of interest within a lengthy audio recording. [paper] [talk at IUI19] [demo video] [code]

[read more]

Vocal Imitation Set: a dataset of vocally imitated sound events using the AudioSet ontology

The largest publicly available dataset of vocal imitations, and the first vocal imitation dataset to adopt the widely used AudioSet ontology. [paper] [dataset]

Lossy Audio Compression Identification

We can identify the lossy audio compression format (e.g., WMA, MP3, AAC) solely by analyzing the compressed audio signal, without any metadata. [paper]

Speeding Learning of Personalized Audio Equalization

Just rate how much you like the audio examples the machine provides, and it will give you the equalization curve for your sound concept. [read more]
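A simple way to turn such ratings into a curve, in the spirit of rating-based equalizer learning, is a per-band regression: each example boosts or cuts every frequency band by some amount, and the slope of the user's rating against each band's gain indicates how much that band contributes to the sound concept. The Python sketch below assumes per-band gains in dB and numeric ratings; it does not reproduce the speedup technique from the paper, and `band_slopes` is a hypothetical name.

```python
def band_slopes(gains, ratings):
    """Estimate an EQ curve from rated examples.

    gains: one list per example, holding that example's per-band gains in dB.
    ratings: one numeric user rating per example.
    Returns, for each band, the least-squares slope of rating vs. gain.
    """
    n = len(ratings)
    if n != len(gains) or n < 2:
        raise ValueError("need at least two examples, one rating each")
    mean_r = sum(ratings) / n
    curve = []
    for b in range(len(gains[0])):
        g = [ex[b] for ex in gains]
        mean_g = sum(g) / n
        cov = sum((gi - mean_g) * (ri - mean_r) for gi, ri in zip(g, ratings))
        var = sum((gi - mean_g) ** 2 for gi in g)
        # A band whose gain never varied carries no information.
        curve.append(cov / var if var else 0.0)
    return curve
```

If a user consistently rates examples with a boosted first band higher and examples with a boosted second band lower, the first slope comes out positive and the second negative; scaling the slopes gives the shape of the equalization curve.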

Probabilistic Prediction of Rhythmic Characteristics in Markov Chain-based Melodic Sequences

A Markov chain-based interactive improvisation system that lets a user control the level of syncopation and rhythmic tension in real time. [read more]
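To make the "probabilistic prediction" idea concrete: if each state of a Markov chain is the onset position of the next note within a beat, and each position carries a syncopation weight, then the expected syncopation k notes ahead follows from propagating the state distribution through the transition matrix. The Python sketch below uses a hypothetical four-position grid (sixteenth notes) with made-up weights and probabilities; it illustrates the computation, not the model from this project.

```python
def step(dist, P):
    """Propagate a probability distribution over states one step through P."""
    n = len(dist)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def expected_syncopation(start_state, P, weights, steps=1):
    """Expected syncopation weight of the onset `steps` transitions ahead."""
    dist = [0.0] * len(P)
    dist[start_state] = 1.0
    for _ in range(steps):
        dist = step(dist, P)
    return sum(p * w for p, w in zip(dist, weights))

# Hypothetical states: sixteenth-note positions 0..3 within a beat.
# Hypothetical weights: on the beat = 0, eighth off-beat = 1, other sixteenths = 2.
WEIGHTS = [0, 2, 1, 2]

# Hypothetical transition matrix; each row sums to 1.
P = [
    [0.6, 0.0, 0.4, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.5, 0.0, 0.0, 0.5],
    [1.0, 0.0, 0.0, 0.0],
]
```

With this toy matrix, starting on the beat gives an expected weight of 0.4 for the next onset and 0.64 two onsets ahead. An interactive system could compare such predictions with a user's target level when choosing among transitions.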

I.M.Hear

I.M.Hear is a tabletop interface that uses mobile devices as UI components. The position of each mobile device on the table is tracked in real time using only acoustic signals in the “theoretically audible, but practically inaudible” range. [read more]
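As a rough illustration of acoustic position tracking in general (not necessarily how I.M.Hear does it), a time-of-flight delay can be converted to a distance using the speed of sound, and three distances to known points pin down a 2-D position. The Python sketch below assumes three reference speakers or microphones at known table coordinates in meters and already-measured delays; `trilaterate` and the anchor layout are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at about 20 degrees C

def distance_from_delay(delay_s):
    """Convert a one-way time-of-flight delay (seconds) to a distance (meters)."""
    return SPEED_OF_SOUND * delay_s

def trilaterate(anchors, distances):
    """Solve for (x, y) from three anchor positions and their distances.

    Each anchor gives a circle (x - xi)^2 + (y - yi)^2 = di^2. Subtracting
    the circle of anchor 0 from those of anchors 1 and 2 leaves two linear
    equations in x and y, solved here with Cramer's rule.
    """
    (x0, y0), (x1, y1), (x2, y2) = anchors
    d0, d1, d2 = distances
    a11, a12 = 2 * (x1 - x0), 2 * (y1 - y0)
    a21, a22 = 2 * (x2 - x0), 2 * (y2 - y0)
    b1 = d0**2 - d1**2 + x1**2 - x0**2 + y1**2 - y0**2
    b2 = d0**2 - d2**2 + x2**2 - x0**2 + y2**2 - y0**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - b1 * a21) / det
    return x, y
```

For anchors at `(0, 0)`, `(1, 0)` and `(0, 1)` and a device at `(0.3, 0.4)`, the distances are 0.5, √0.65 and √0.45 m, and `trilaterate` recovers `(0.3, 0.4)` up to floating-point error. Subtracting one circle equation from the others keeps the solve linear, which is cheap enough to repeat for every position update.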

Where Are You Standing?

Where Are You Standing? is an interactive, collaborative mobile music piece that uses smartphones’ digital compasses. [read more]

Turning Into Sound

Turning Into Sound is an interactive multimedia installation. People can make music by drawing on a whiteboard with pens in three colors. [read more]

Dance Performance with Mobile Phones: Don’t Imagine

Don’t Imagine is a new media dance performance. I participated as a multimedia engineer, developing mobile-phone-based interactive music controllers for the dancers. [read more]