I.M.Hear

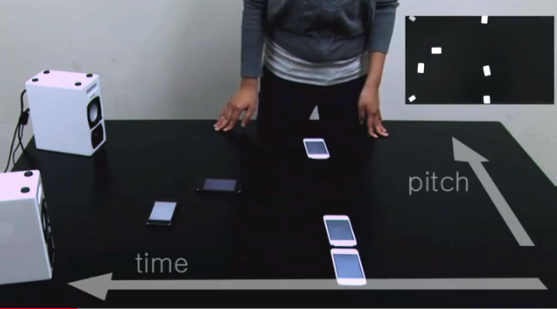

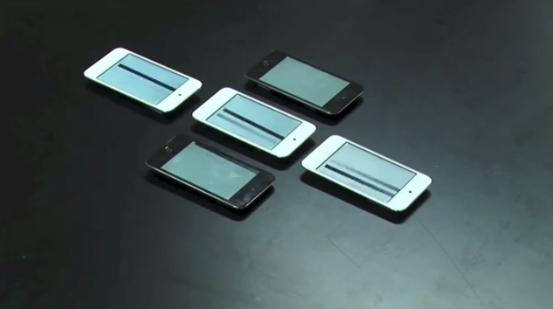

I.M.Hear is a tabletop interface built around mobile devices. It is based on the TAPIR Acoustic Locator (TAL), a location system for mobile devices that uses barely audible sound: the positions of the devices on the table are tracked in real time using acoustic signals only. A virtual scan line moves from left to right across the surface of the table, and each device displays its part of the scan line when it is “touched” (as shown in the video below), giving the user visual feedback. I participated in this project as a sound designer. Surrounding sounds, such as ambient noise and the users’ voices, are recorded through an external microphone and then manipulated and rearranged in Max/MSP to produce pitch-shifted versions.
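As a rough illustration of the scan-line idea, the sketch below computes where a left-to-right sweeping line sits at a given time and what portion of it a device would draw. It assumes that “touched” means the line is currently passing over the device, that positions are one-dimensional, and that the line wraps around at the right edge. The names `Device`, `scan_line_x`, and `local_line_x` are hypothetical, and the device position is passed in directly rather than obtained from TAL.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Device:
    x: float      # left edge of the device on the table (assumed units: cm)
    width: float  # width of the device screen in the same units


def scan_line_x(t: float, table_width: float, period: float) -> float:
    """Position of a scan line that sweeps left to right and wraps every `period` seconds."""
    return (t % period) / period * table_width


def local_line_x(device: Device, line_x: float) -> Optional[float]:
    """Scan line position in the device's own coordinates, or None if the line is not over it."""
    if device.x <= line_x < device.x + device.width:
        return line_x - device.x
    return None


# Example: a 100 cm wide table swept every 4 seconds, with one device at 20 cm.
phone = Device(x=20.0, width=10.0)
line = scan_line_x(t=1.0, table_width=100.0, period=4.0)
offset = local_line_x(phone, line)  # where the device would draw the line, if at all
```

In a real setup the `x` field would be updated continuously from the tracked position, so a device moved across the table would pick up the line at a different moment in the sweep.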

- Software: Max/MSP, Processing, I.M.Hear app (on iOS, written by Seunghun Kim)
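
The pitch shifting in this project is done in Max/MSP, and the actual patch is not described here. Purely to illustrate the concept, here is a minimal sketch of one simple technique, pitch shifting by resampling with linear interpolation. It is an assumption for illustration, not the method used in the project; the function name `pitch_shift_resample` is hypothetical, and this approach also changes the duration of the sound.

```python
def pitch_shift_resample(samples, semitones):
    """Shift pitch by reading the input at a different rate (duration changes too)."""
    ratio = 2.0 ** (semitones / 12.0)   # frequency ratio for the requested interval
    n_out = int(len(samples) / ratio)   # higher pitch -> shorter output
    out = []
    for i in range(n_out):
        pos = i * ratio                 # fractional read position in the input
        i0 = int(pos)
        i1 = min(i0 + 1, len(samples) - 1)
        frac = pos - i0
        # Linear interpolation between the two neighbouring input samples.
        out.append((1.0 - frac) * samples[i0] + frac * samples[i1])
    return out


# One octave up reads every second sample, halving the length.
octave_up = pitch_shift_resample([0, 1, 2, 3, 4, 5, 6, 7], 12)
```

Keeping the original duration while shifting pitch would need a different technique, such as a phase vocoder or granular overlap-add.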

Demo video

Related papers

- Seunghun Kim, Bongjun Kim, and Woon Seung Yeo, “IAMHear: A Tabletop Interface with Smart Mobile Devices using Acoustic Location,” Conference on Human Factors in Computing Systems (CHI) works in progress, Apr. 2013.